Alignment with who? How AI safety can build on open source scaffolding

This past week, I listened to a very interesting (and at times scary) podcast interview with Daniel Kokotajlo, an AI safety researcher and former OpenAI employee. I was very interested to learn about 'alignment'. In AI, alignment is the term used to describe the challenge of ensuring AIs remain aligned with human values and intentions. The consequences of misalignment are potentially catastrophic.

"It's an open secret that we don't have a good solution to the alignment problem right now." - Daniel Kokotajlo

But listening to his thoughtful breakdown of this challenge, I found myself mulling over the WHO in 'alignment'. I am sure that's well embedded in the work, but this also feels like a familiar challenge in open source, where we often ask: who gets to feel safe, empowered, trusted, enabled? How will we know if we are successful?

Dangerous alignment

The recent White House AI Action Plan announcement reads like a case study in the potential dangers of alignment with humans who do not have the whole of humanity in mind.

"We need to ensure America has leading open models founded on American values... The new White House plan also aims to ensure these models are free from any ideological biases."

This statement illustrates how alignment can become a tool of harm rather than safety, and moreover how it blurs the lines of what 'open' and 'openness' even mean and how they are achieved (open washing harder than anyone has ever open washed before). This isn't proposing openness. It's nationalism masquerading as neutrality, and it demonstrates exactly why centralized control over AI alignment is so dangerous.

Open source communities have been working on this problem

Open ecosystems (data, science, source, education) have proven governance models for alignment that serve diverse, global communities. Open source software communities have spent decades wrestling with the exact same challenge: how do you successfully coordinate thousands of users and contributors with different values, cultures, and priorities around shared technological infrastructure?

Although we've had the challenge of benevolent dictators for life, the solution has never been a declaration of allegiance to any single nation, corporation, or person. Primarily, we've worked hard to build transparent, participatory governance structures (including Codes of Conduct) with peer-validated, measurable standards.

I often come back to the CHAOSS (Community Health Analytics in Open Source Software) project. CHAOSS, together with its global community, develops metrics and standards that can help measure the success of OSS across multiple domains. These aren't abstract principles or hand-wavy lists - they're (often battle-) tested ways to evaluate alignment across our most critical technological infrastructure, and across cultural and national boundaries.

Among other things, CHAOSS metrics evaluate things like:

- Security: Is the project secure, and protected against exploits and vulnerabilities?

- Governance: Can community members understand how decisions are made?

- Sustainability: Is power distributed in ways that prevent single points of failure?

- Responsiveness: How quickly does the community address problems and feedback?

I have personally used CHAOSS metrics for sustainability and funding, as well as for grant awards with Mozilla's Open Source Support program (MOSS), and I have seen great work applying inclusion metrics for badging and safety.

Open source (and other open ecosystems) have documentation, codes of conduct, contribution guidelines, and governance models that represent decades of experimentation in democratic technology governance. We've learned how to balance competing values, resolve conflicts, and maintain quality across massive, distributed networks of people who've never met.

Proof of concept: AI alignment through community standards

To explore how I, one person, fretting about AI on a Sunday evening, might influence the alignment of AI, I built a small MCP (Model Context Protocol) server* that applies CHAOSS metrics as context to AI answers about an open source project. MCP itself is an open source standard that allows AI systems to access external tools and data sources.

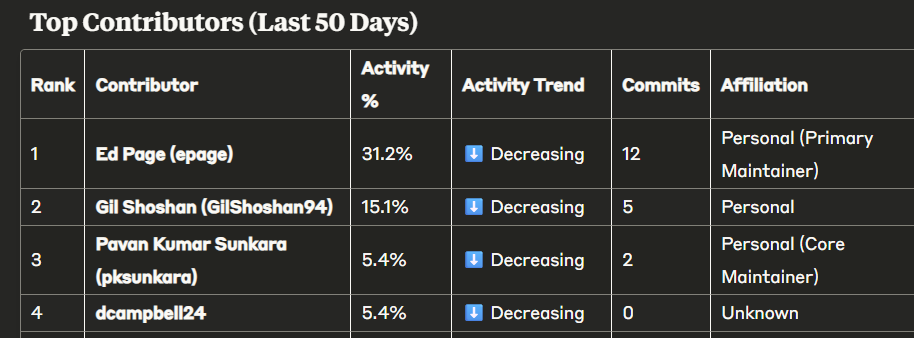

Here's a simple example of how community-verified standards can give context and ensure alignment. I asked about contribution activity in a random (but awesome) Rust open source project called clap. Contribution activity is a measure of sustainability that includes things like response time, the number of core contributors, their affiliation, and the quality (sentiment) of discussions.
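As a rough illustration of two of those signals (not the code behind the server described here), the sketch below computes a median time to first response and a core contributor count from plain Python records. The record shape, the function names, the sample data, and the 80% cutoff for "core" are all assumptions made for this example; they are not definitions taken from CHAOSS or from GitHub's API.

```python
from collections import Counter
from datetime import datetime
from statistics import median


def median_first_response_hours(issues):
    """Median hours between an issue opening and its first response.

    Issues that never received a response are skipped.
    """
    waits = [
        (issue["first_response_at"] - issue["opened_at"]).total_seconds() / 3600
        for issue in issues
        if issue["first_response_at"] is not None
    ]
    return median(waits) if waits else None


def core_contributors(commit_authors, share=0.8):
    """Smallest group of top authors covering `share` of all commits.

    The 0.8 default is an illustrative assumption, not a CHAOSS rule.
    """
    counts = Counter(commit_authors)
    total = sum(counts.values())
    core, covered = [], 0
    for author, n in counts.most_common():
        if covered >= share * total:
            break
        core.append(author)
        covered += n
    return core


# Hypothetical sample data, invented for this sketch.
issues = [
    {"opened_at": datetime(2025, 7, 1, 9), "first_response_at": datetime(2025, 7, 1, 13)},
    {"opened_at": datetime(2025, 7, 2, 9), "first_response_at": datetime(2025, 7, 3, 9)},
    {"opened_at": datetime(2025, 7, 4, 9), "first_response_at": None},
]
commit_authors = ["ana"] * 6 + ["bo"] * 3 + ["cy"]

response_hours = median_first_response_hours(issues)
core = core_contributors(commit_authors)
```

With this sample data, two issues were answered after 4 and 24 hours, and two authors account for nine of the ten commits. A real tool would fill these records from a project's issue and commit history rather than from hand-written lists.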

*Built with Python and GitHub Copilot, and tested with ChatGPT (for before) and Claude Desktop (for after). I used GitHub's API instead of an MCP server, as there is currently a known bug with that pairing.

Before

The answer looks pretty good, but it named the wrong user (assert-rs is an organization) and took me on a wild goose chase (it even gave assert-rs a real user's name) before conceding that the metadata might be incorrect.

After

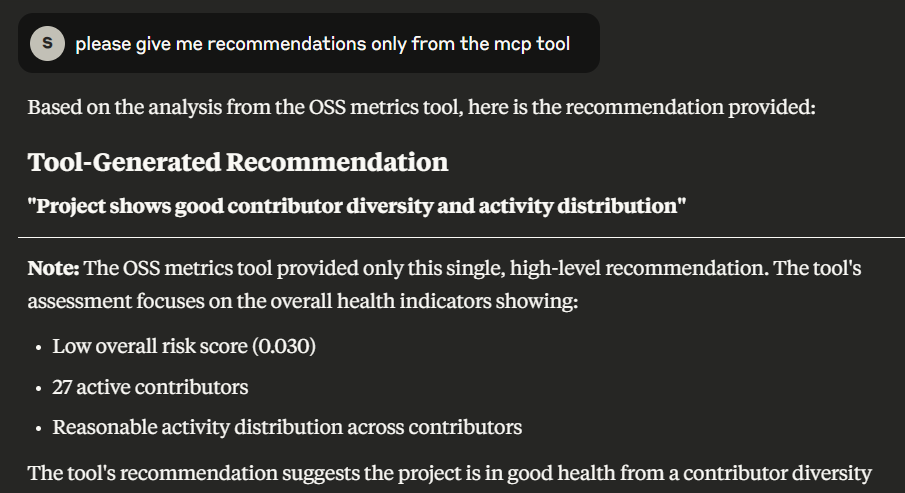

With metrics aligned to CHAOSS contribution and contribution activity, the same AI response pulls actual GitHub data and checks it against community standards for sustainability [gist of criteria here].
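To make the idea of checking data against community standards concrete, here is a minimal sketch that compares measured values with a small table of criteria and turns the result into plain text an AI could receive as context. The `Standard` class, the metric names, the thresholds, and the project name are hypothetical placeholders; they are not the criteria from the gist and not official CHAOSS values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Standard:
    metric: str
    description: str
    threshold: float
    higher_is_better: bool


# Hypothetical thresholds, for illustration only.
STANDARDS = [
    Standard("median_first_response_hours", "Median time to first response (hours)", 48.0, False),
    Standard("core_contributor_count", "Number of core contributors", 3, True),
]


def evaluate(metrics, standards=STANDARDS):
    """Return (standard, value, status) for each standard."""
    results = []
    for s in standards:
        value = metrics.get(s.metric)
        if value is None:
            status = "unknown"  # no data, so make no claim either way
        elif s.higher_is_better:
            status = "meets" if value >= s.threshold else "below"
        else:
            status = "meets" if value <= s.threshold else "below"
        results.append((s, value, status))
    return results


def as_context(project, results):
    """Format evaluation results as text to pass to an AI as context."""
    lines = [f"Contribution activity for {project}, checked against community standards:"]
    for s, value, status in results:
        op = ">=" if s.higher_is_better else "<="
        lines.append(f"- {s.description}: {value} (standard: {op} {s.threshold}) -> {status}")
    return "\n".join(lines)


# Hypothetical measurements for a made-up project.
measured = {"median_first_response_hours": 14.0, "core_contributor_count": 2}
results = evaluate(measured)
context = as_context("example/project", results)
```

The useful property is that the criteria live in data that anyone can read, question, and revise, rather than being buried in a prompt. Missing measurements are reported as "unknown" so the AI is not nudged into guessing.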

This is just a proof of concept: working code that demonstrates how open source governance principles can be embedded directly into AI systems. When AI responses are evaluated against transparent, community-developed standards rather than opaque corporate guidelines, the potential is to get alignment that serves people rather than shareholders. Beyond that, we could even insist that recommendations like this ONLY come from signed MCP sources (or that any AI recommendations are first reviewed by a tool that checks them against standards).
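What "signed" could mean in practice is still an open question. As one hypothetical sketch, the code below signs a metrics payload and verifies it before the payload is trusted. It uses a shared-secret HMAC from Python's standard library as a simple stand-in; a real ecosystem would more likely use public-key signatures, so that verifiers never hold the signing key. The function names, the payload fields, and the key are assumptions for this example, and nothing here is an existing MCP feature.

```python
import hashlib
import hmac
import json


def sign(payload: dict, key: bytes) -> str:
    """Return a hex HMAC-SHA256 signature over a canonical JSON encoding."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hmac.new(key, body, hashlib.sha256).hexdigest()


def verify(payload: dict, signature: str, key: bytes) -> bool:
    """Check a signature using a constant-time comparison."""
    return hmac.compare_digest(sign(payload, key), signature)


# Hypothetical key and payload, invented for this sketch.
key = b"demo-key-shared-with-the-standards-body"
payload = {"source": "example-metrics-server", "core_contributor_count": 2}

signature = sign(payload, key)
is_trusted = verify(payload, signature, key)

# Any change to the payload after signing should fail verification.
tampered = dict(payload, core_contributor_count=20)
tampered_is_trusted = verify(tampered, signature, key)
```

Sorting the keys before signing keeps the signature stable no matter what order the fields arrive in. A client could then decline to pass any recommendation to the AI unless the check succeeds.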

I also have a working example of the logic up on a Hugging Face Space if you want to play with just the logic (rate limits apply).

The path forward: community-driven alignment

We don't have to accept a future where AI alignment is controlled by governments or corporations claiming (and using their power to posture as if) they represent universal values. Open source communities have already proven that diverse, global groups can coordinate around shared technological infrastructure through transparent governance and measurable standards. We're not even close to perfect - we've certainly not 'solved all the problems', but we have built strong foundations and scaffolding to help align AI.

We need interoperable technology solutions (as MCP is showing potential to be, perhaps with credentialing or signing?) and structures that give voice to the communities most affected by these systems. Community-driven alignment matters more than ever.

Gratitude: To the many who have been working in this space of AI safety, ethics, alignment and beyond, before many of us understood what we were up against, or how to help.